Transforming scheduling for researchers: from discovery interviews to insights to action

UXR, Product

Context

As a senior product manager of a new cross-functional team of engineers, a designer, and a data analyst, I facilitated collaboration within the core team and with our stakeholders in customer success, marketing, sales, operations, UX research, and more. Together, we worked to improve our product, a platform for user researchers to streamline study logistics and participant recruitment and management.

Why

The problem

Scheduling flows were one of the biggest areas of technical and design debt in our product, and we had the customer feedback to show for it. At the company, no team had touched scheduling in over five years, so we only had a surface-level understanding of user needs and complaints. In that time, many general automated scheduling products had become popular, and our outdated system, with its manually selected time blocks, didn’t live up to current industry standards. Our team charter was to improve engagement and retention in these scheduling flows.

Leadership and stakeholders were excited to finally have a team tackle the challenge of scheduling. There was a hope that we would totally overhaul the scheduling functionality and have a “big splash” marketing moment.

Research goals

As we kicked off the new team to improve the scheduling experience, our research goals were:

- What are users’ needs when managing scheduling for a moderated research project?

- What are common use cases when managing scheduling for a moderated research project?

- What are the biggest pain points in users’ current scheduling workflows (inside or outside our product)?

Anticipated artifacts

We expected to produce several artifacts:

- Identify and list users’ key needs for scheduling

- Identify most common use cases for scheduling

- Identify and list pain points for scheduling

Anticipated decisions or actions

We expected the artifacts to inform decision-making:

- Share use cases with cross-functional stakeholders, especially customer-facing teammates, to get their feedback and begin to establish shared language

- Prioritize pain points (opportunities) and choose the first one to begin addressing with design and development work

- Conduct a story mapping exercise with the designer, tech lead, and analyst

- Shape the designer’s lo-fi explorations for our long-term scheduling vision

Approach

Method

Since we were starting with a limited understanding of our users’ needs and pain points in this area, we wanted to start with exploratory research to gain a broad understanding of user needs, build empathy for researchers’ challenges in their end-to-end workflows (inside and outside of our product), develop hypotheses, and set the stage for more focused research later. To achieve this, we decided to conduct half-hour 1:1 interviews via video calls, with the option for participants to screen share with us. This method allowed us to dive deep into researchers’ experiences and gain insights to inform the direction of the project.

Recruitment

In previous research, we had already established two archetypal roles on a research team: an individual contributor researcher (IC Researcher), who typically conducted research under the title of researcher, product designer, product manager, marketer, etc., and a research operations manager (ReOps Manager), who typically oversaw a research program and was focused on enabling research. In this project, we wanted to talk to people in both roles. We expected the scheduling needs of IC Researchers and ReOps Managers to be overlapping but distinct.

Based on an informal look at written customer feedback about scheduling, we also had a hypothesis that more complex research - such as with more teammates involved, many participants, multiple sequential sessions, or multiple studies running in parallel - resulted in more complaints. Therefore, we wanted to target folks that worked on both simple projects and complex projects.

We were able to segment our existing participant pool easily based on roles. We had some data about project complexity, such as total number of participants or total collaborators on a project, but we planned to better understand project complexity through our interviews.

We targeted ten total sessions. We completed interviews with four IC Researchers and five ReOps Managers, and had one no-show. We didn’t backfill the no-show because we were already seeing strong themes emerge and prioritized moving quickly.

Moderator guide

We designed our interview questions to elicit storytelling about recent experiences scheduling participants for a moderated research study. We encouraged participants to walk us through their workflow, step by step, without asking them about any specific tools.

A sample of our interview questions:

- Learning goals: learn context about how researchers conduct moderated studies

- What’s your current role? What does your team look like?

- Learning goals: understand scheduling-specific needs, workflows, and pain points.

- Can you tell me about the last moderated study you did?

- How many people on your team attended each session? What was the primary reason that each person attended the session?

- Can you show or talk me through how you scheduled research sessions for that project?

- What was the first step you took to begin the scheduling process? (Etc encouraging them to take us through a sequence of actions.) What was the hardest part of this process?

For the full moderator guide, see Appendix A.

Findings

What are users’ needs when managing scheduling for a moderated research project?

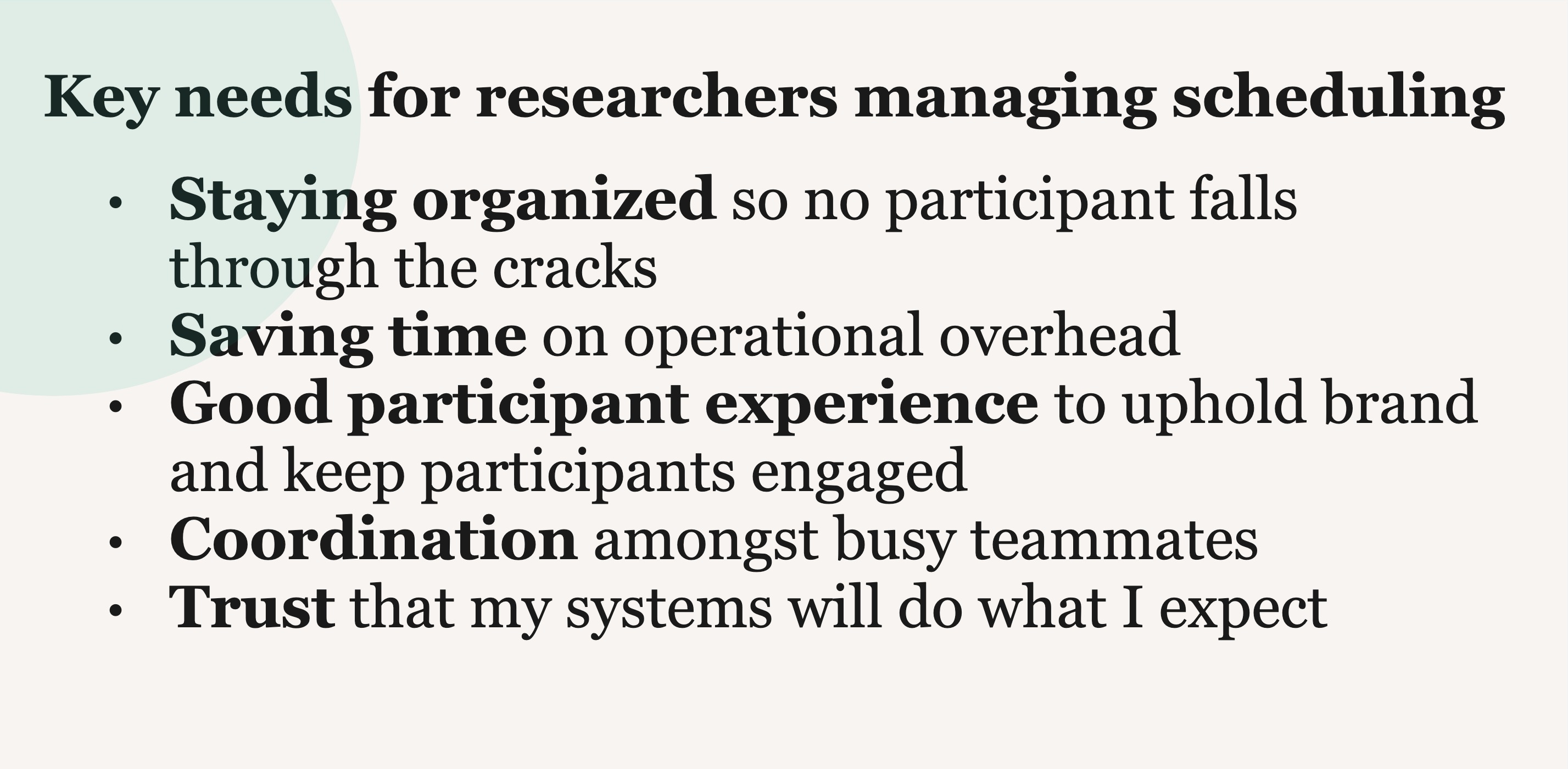

Insight: Researchers’ key needs are organization, time savings, participant experience, coordination, and trust.

We heard that organization, time savings, participant experience, coordination, and trust were important to researchers. These needs became a through line in our designer’s explorations.

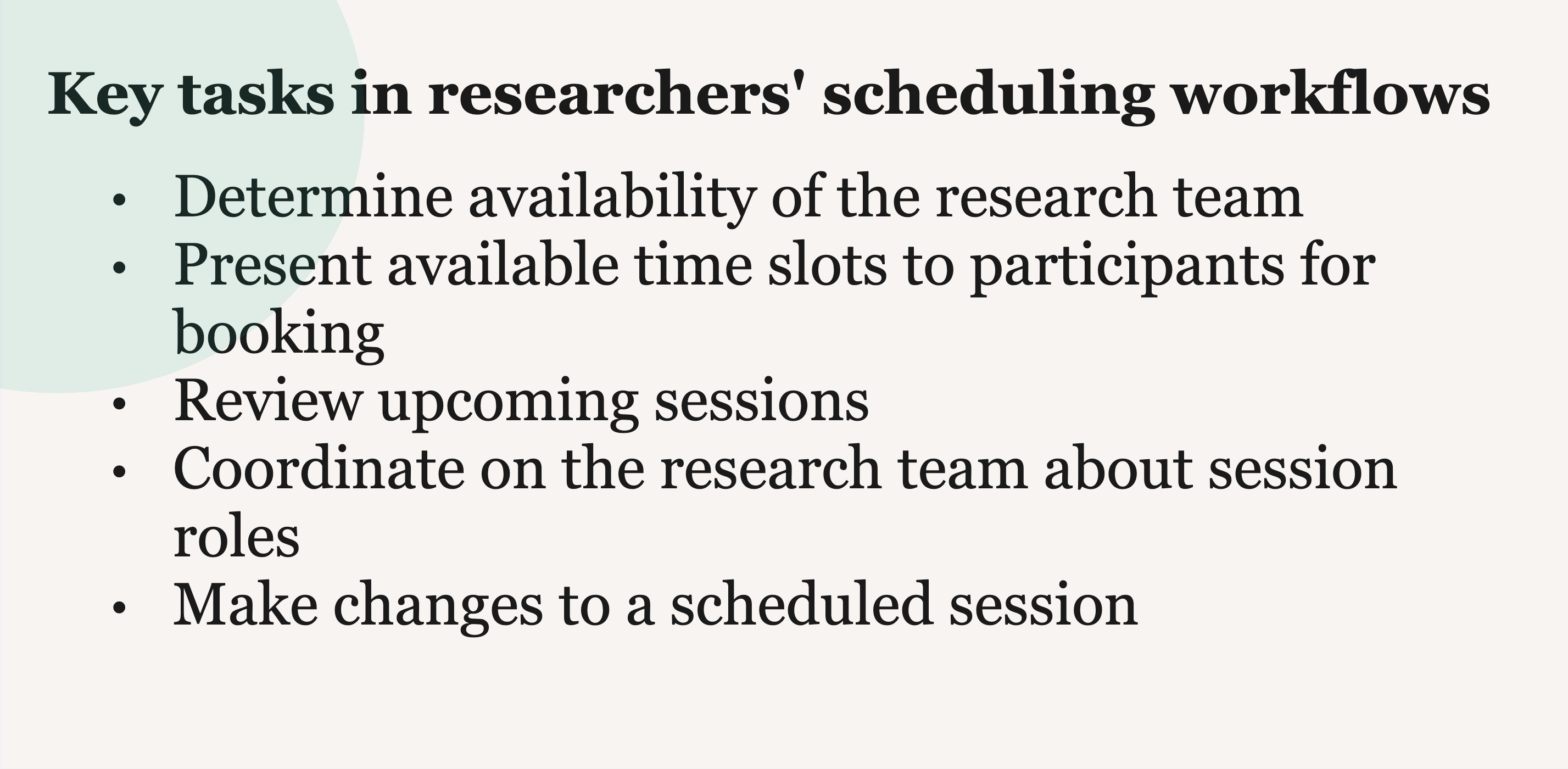

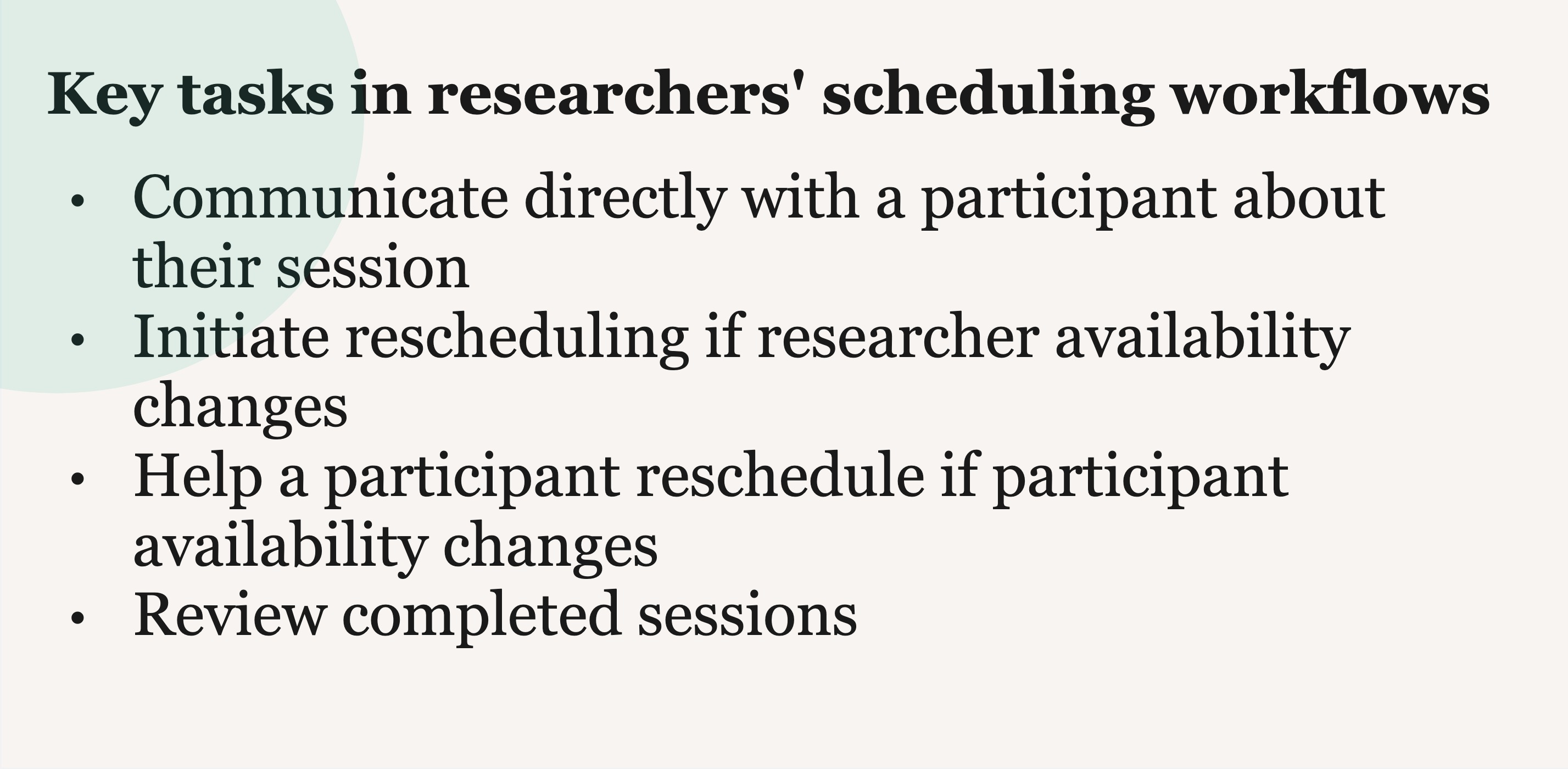

Insight: We identified a list of researchers’ key tasks.

We also built out a list of common tasks that researchers undertook when managing the scheduling of a moderated research study.

This list of tasks became input into a story mapping exercise (see below).

What are common use cases when managing scheduling for a moderated research project?

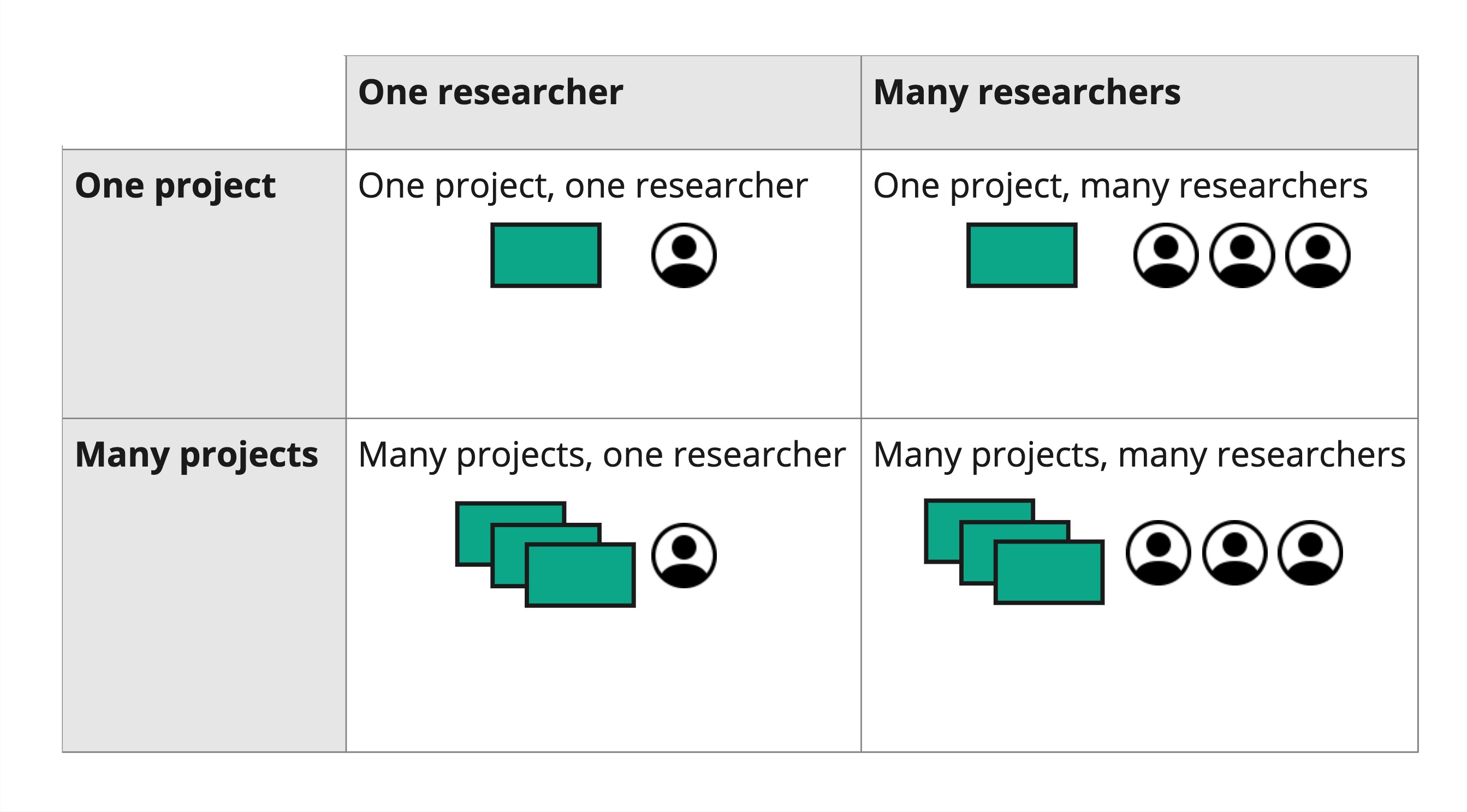

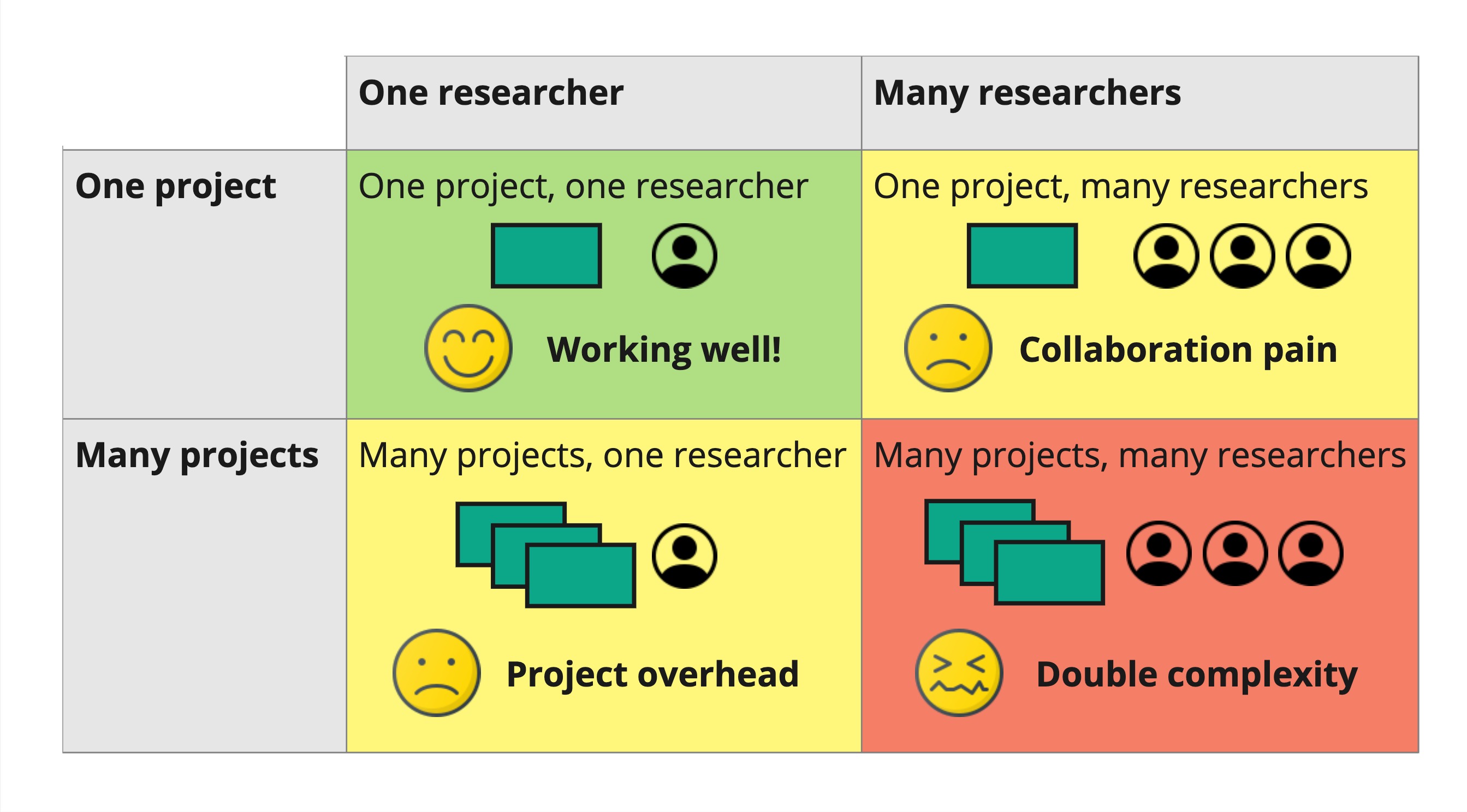

Insight: We identified four use cases based on one or many researchers working on one or many projects.

From our research, there emerged four use cases based on a researcher working alone vs with many researchers, and on a single project vs many projects:

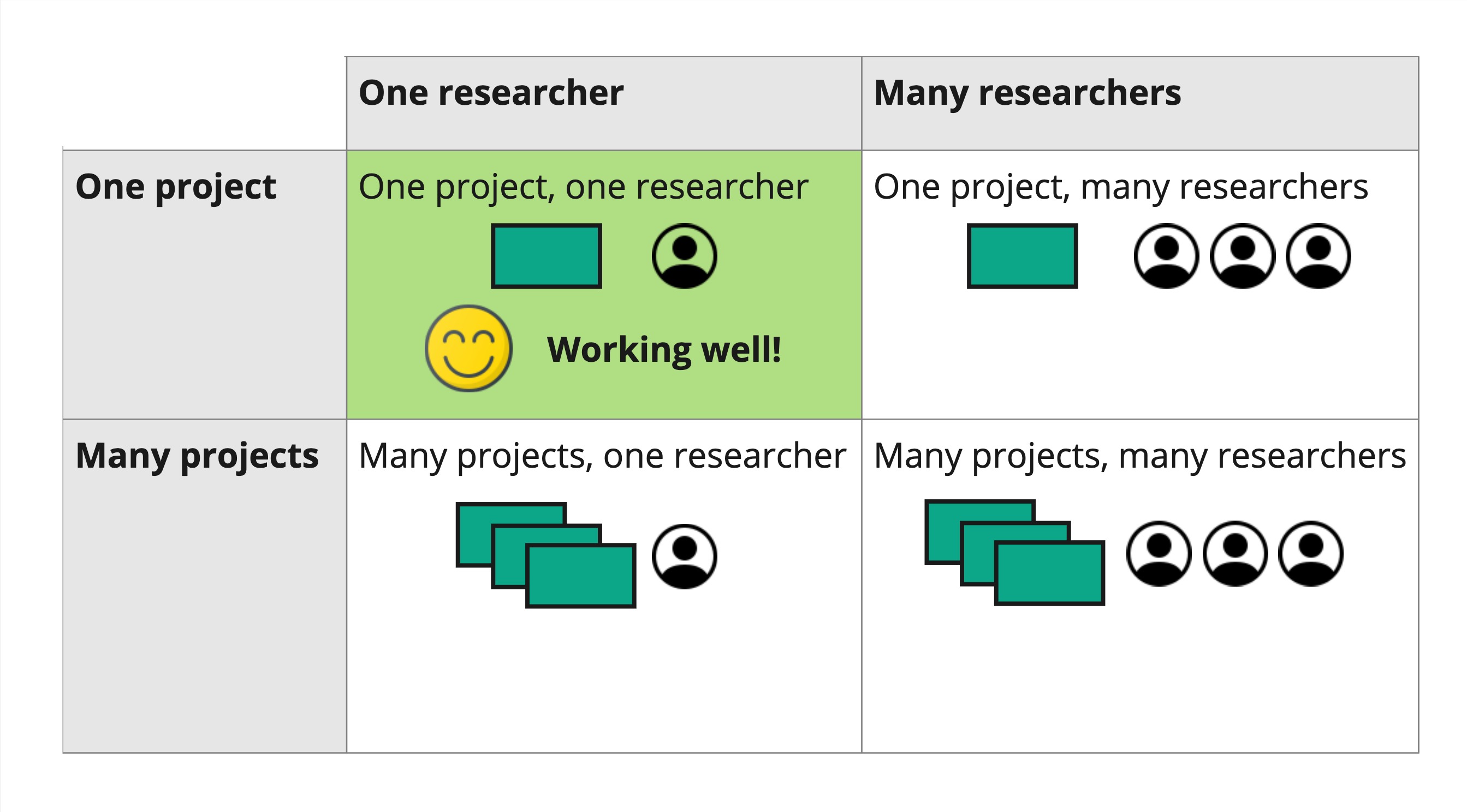

Insight: Our product was meeting the needs of one use case well.

Entering the research, we held a hypothesis that researchers universally disapproved of the scheduling flows and wanted our tool to mimic the latest automated scheduling platforms (e.g. Calendly or SavvyCal). However, our analysis revealed a surprising finding: we were wrong! Contrary to our initial assumptions, there was one use case where our manual scheduling flows were already meeting researchers’ needs: a single researcher working on a single project didn’t need automation.

For the other three use cases, we did hear a lot of pain points about coordinating research with multiple teammates and/or running multiple studies at the same time. Researchers ran into more challenges as the complexity of their projects grew.

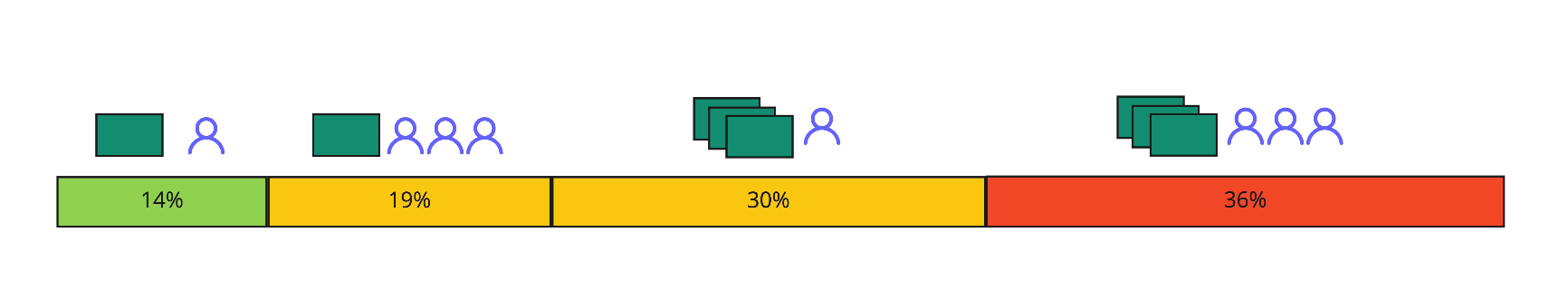

After our interviews, we looked at usage data to understand how often these use cases occurred. We found that the simplest use case represented only 14% of projects. Improving the experience for the three complex use cases would make a big impact on a larger portion of our users.
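As a rough illustration of how usage data could be bucketed into the four use cases, here is a minimal Python sketch. The function names, the input fields (a researcher count and a count of parallel projects per record), and the "more than one" thresholds are assumptions made for this example, not a description of our actual analysis, and the sample records are invented.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def classify_use_case(researcher_count: int, project_count: int) -> str:
    """Map a record onto one of the four use cases.

    Assumption: "many" simply means more than one.
    """
    who = "one researcher" if researcher_count <= 1 else "many researchers"
    what = "one project" if project_count <= 1 else "many projects"
    return f"{who}, {what}"


def use_case_shares(records: Iterable[Tuple[int, int]]) -> Dict[str, float]:
    """Return the fraction of records that fall into each use case."""
    counts = Counter(classify_use_case(r, p) for r, p in records)
    total = sum(counts.values())
    return {use_case: n / total for use_case, n in counts.items()}


# Invented sample records: (researcher_count, parallel_project_count)
sample = [(1, 1), (3, 1), (1, 4), (5, 2)]
shares = use_case_shares(sample)
```

With real usage data in place of the invented sample, the share for "one researcher, one project" is the figure to compare against the other three use cases.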

What are the biggest pain points in users’ current scheduling workflows (inside or outside our product)?

Insight: Researchers had anxiety about the rescheduling function.

We were surprised to notice high anxiety about rescheduling flows. It became evident that researchers had a general distrust in the scheduling tool’s reliability due to usability issues, poor discoverability, missing functionality, and bugs. And the stakes were high! Issues could cost them valuable time spent on no-shows and 1:1 messaging with participants, lose them qualified participants, damage their brand with a bad participant experience, and make them show up frazzled and stressed to a session with last-minute scheduling difficulties.

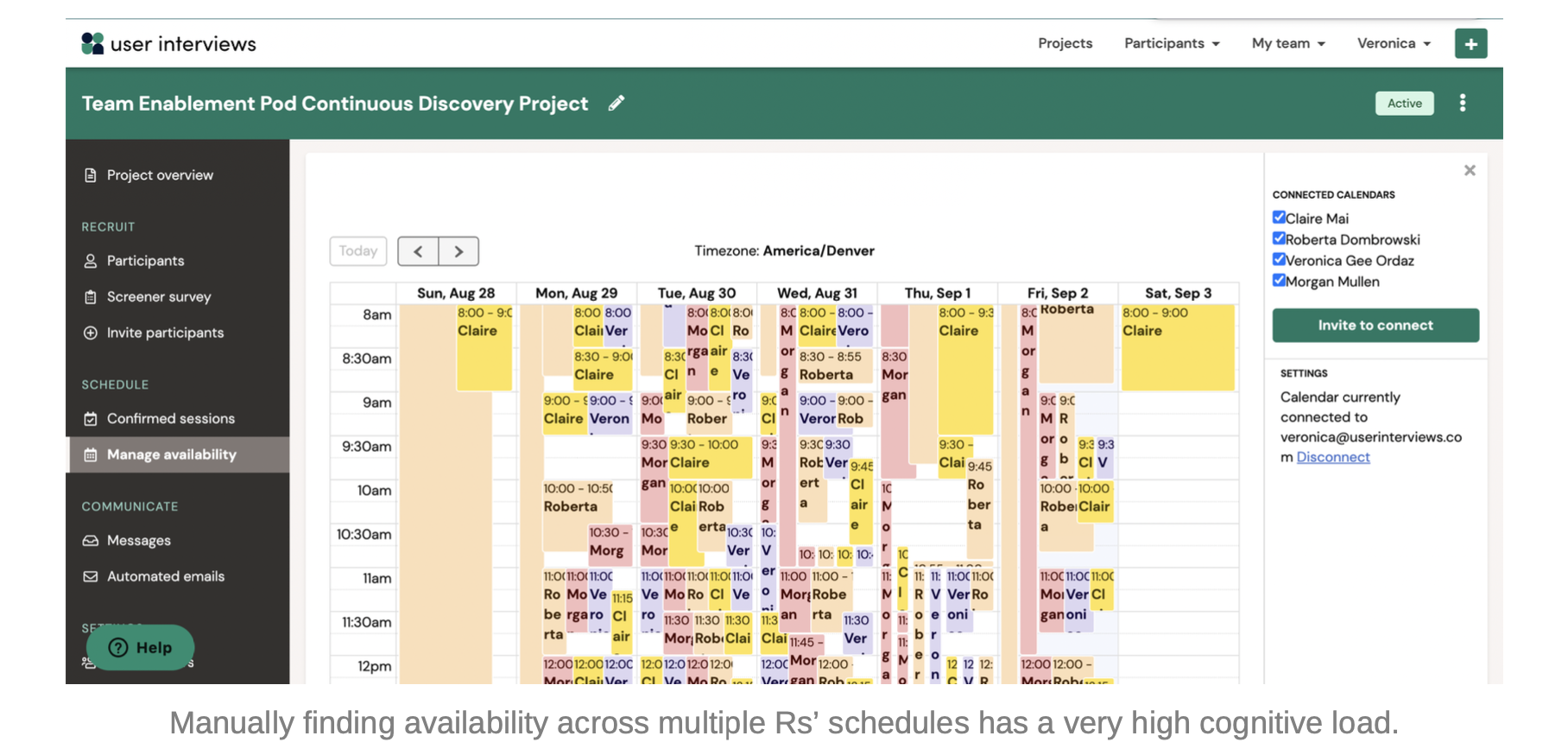

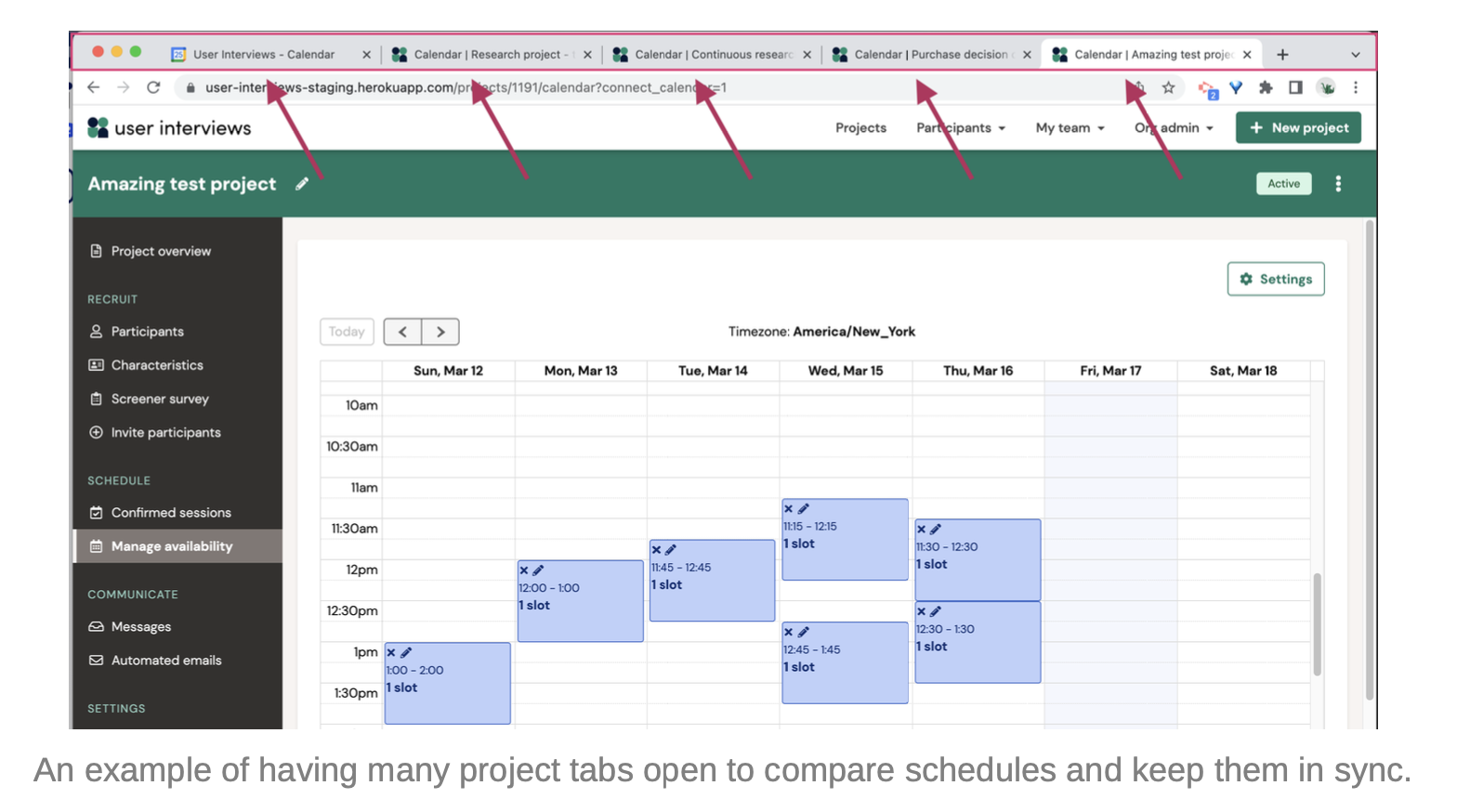

Insight: Collaboration was a huge pain point.

Based on our own experience using our product, we weren’t surprised that collaboration came up over and over again as a pain point.

In addition to usability issues in the existing functionality, much of what researchers needed to do wasn’t supported. For example, a researcher might want to rotate moderator roles between two teammates and pair each moderator with a note-taker from a pool of four teammates. Many researchers had workflows outside of our product, such as in spreadsheets, other calendar apps, and even paper systems, to keep track of who was doing what.

Insight: Managing multiple projects was a pain point. In some cases, the root cause was the lack of quota functionality.

While we were aware that there was a demand for support for quotas (grouping participants under different recruitment criteria within a project) in our platform, our research revealed an interesting workaround employed by some researchers. Instead of having dedicated support for quotas, researchers resorted to creating separate projects for each quota. Although this workaround addressed the immediate need for quotas, it compounded challenges in scheduling management because each project had separate scheduling settings.

Impact

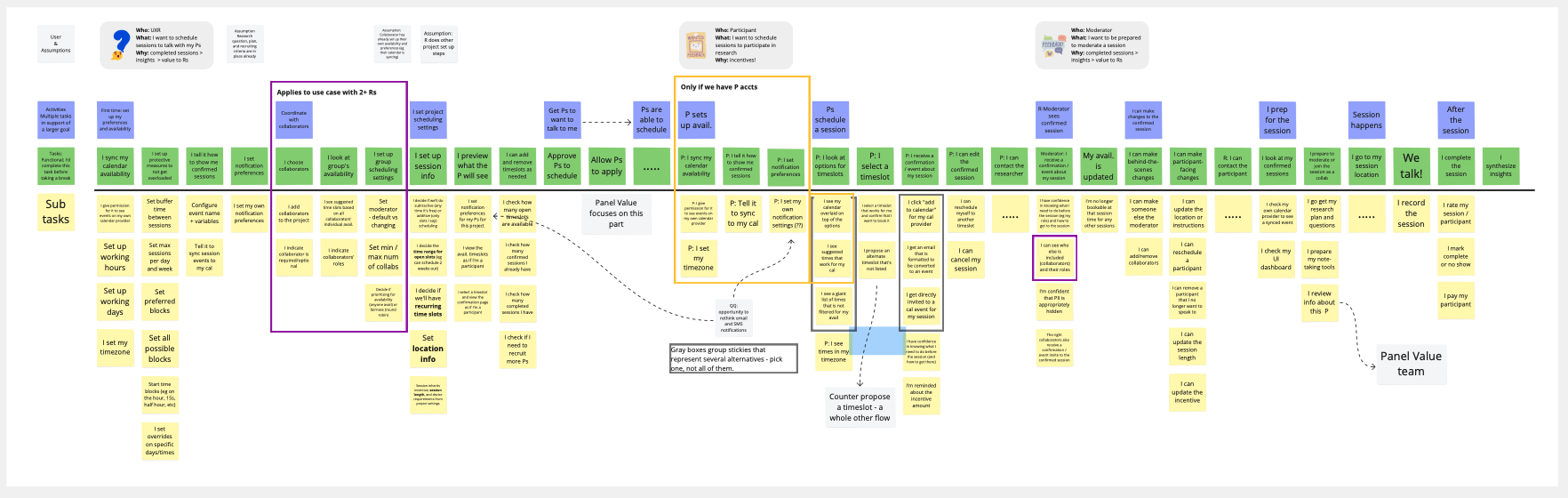

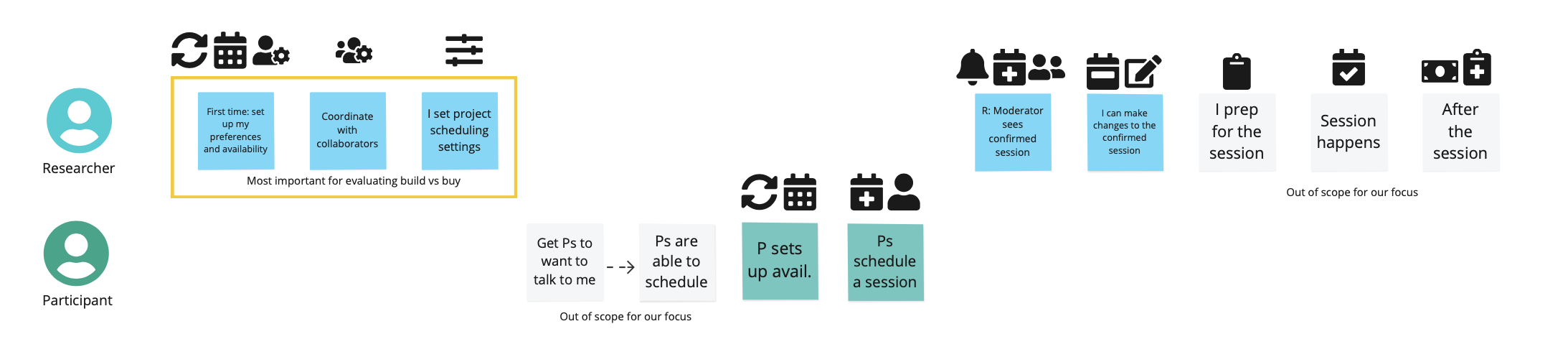

We did a story mapping exercise to socialize mental models for scheduling.

We used researchers’ key tasks as input for a story mapping exercise that helped us understand non-linear paths between actions and how different actors relied on each other to progress through the journey. As we built out the model, we brought in more stakeholders to provide different perspectives and help us iterate on it. Eventually, we socialized our mental models for scheduling workflows across the company in order to build shared language and context.

In-depth story mapping for scheduling workflows.

Shorthand story mapping for scheduling workflows.

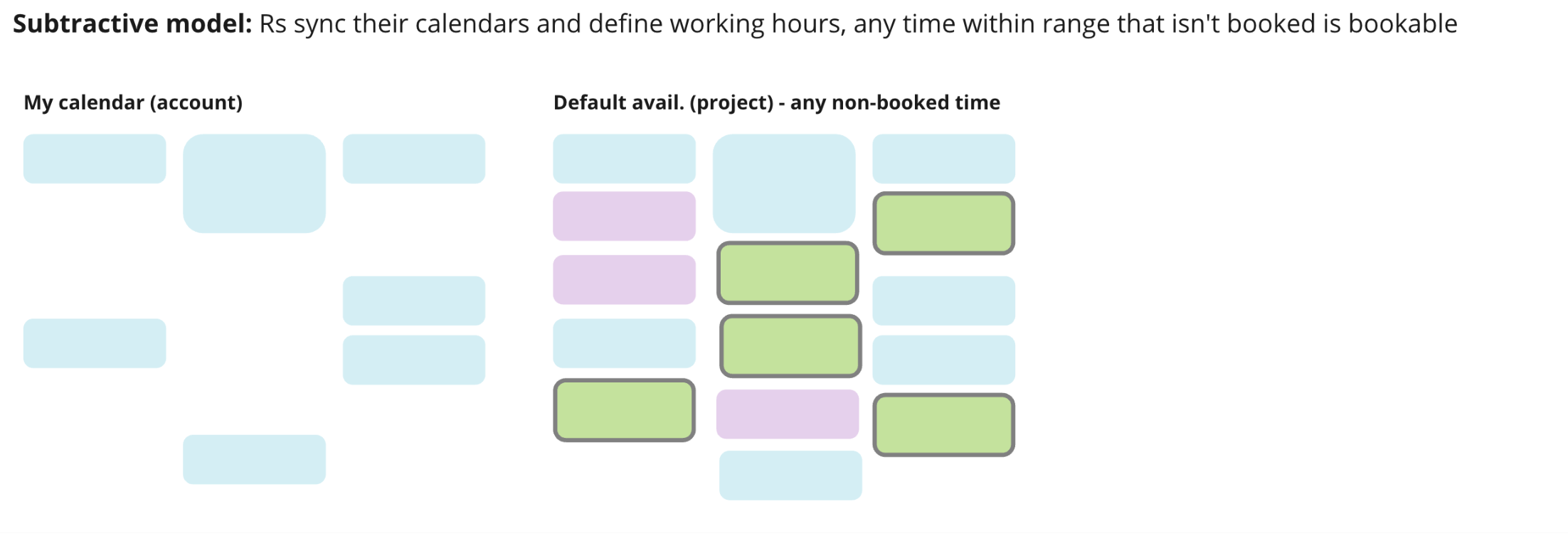

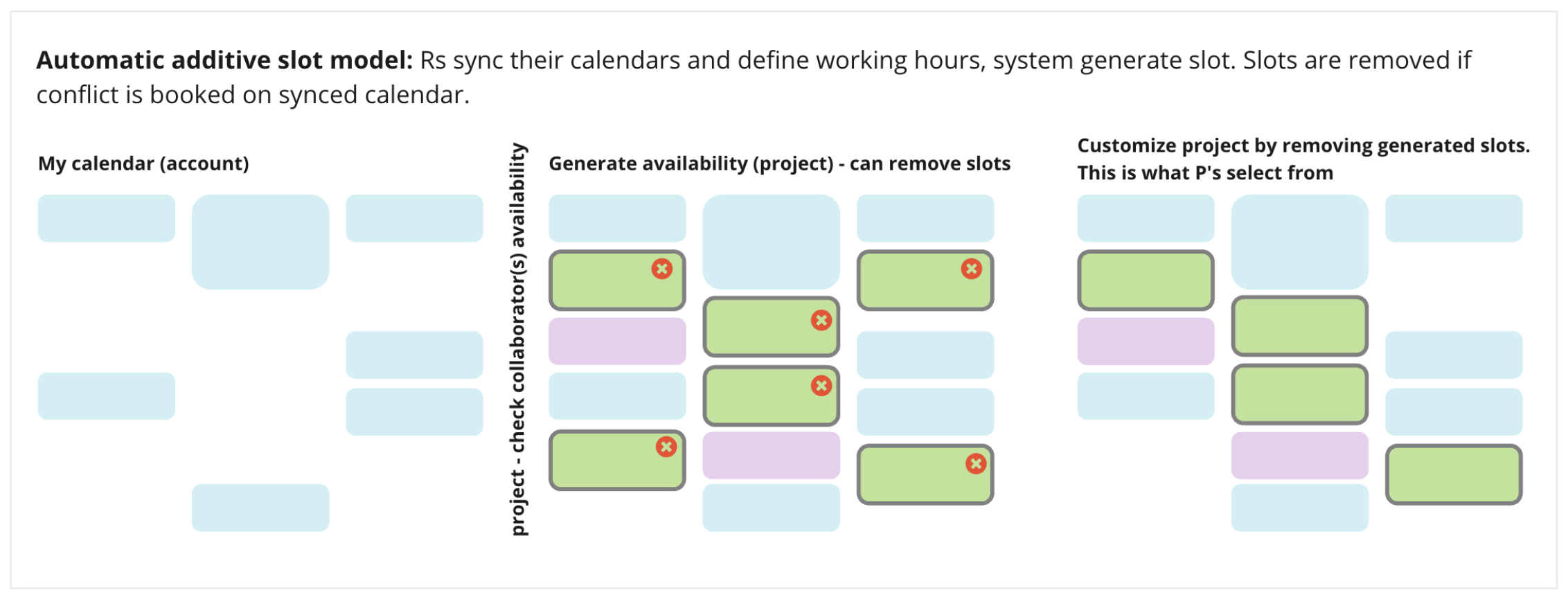

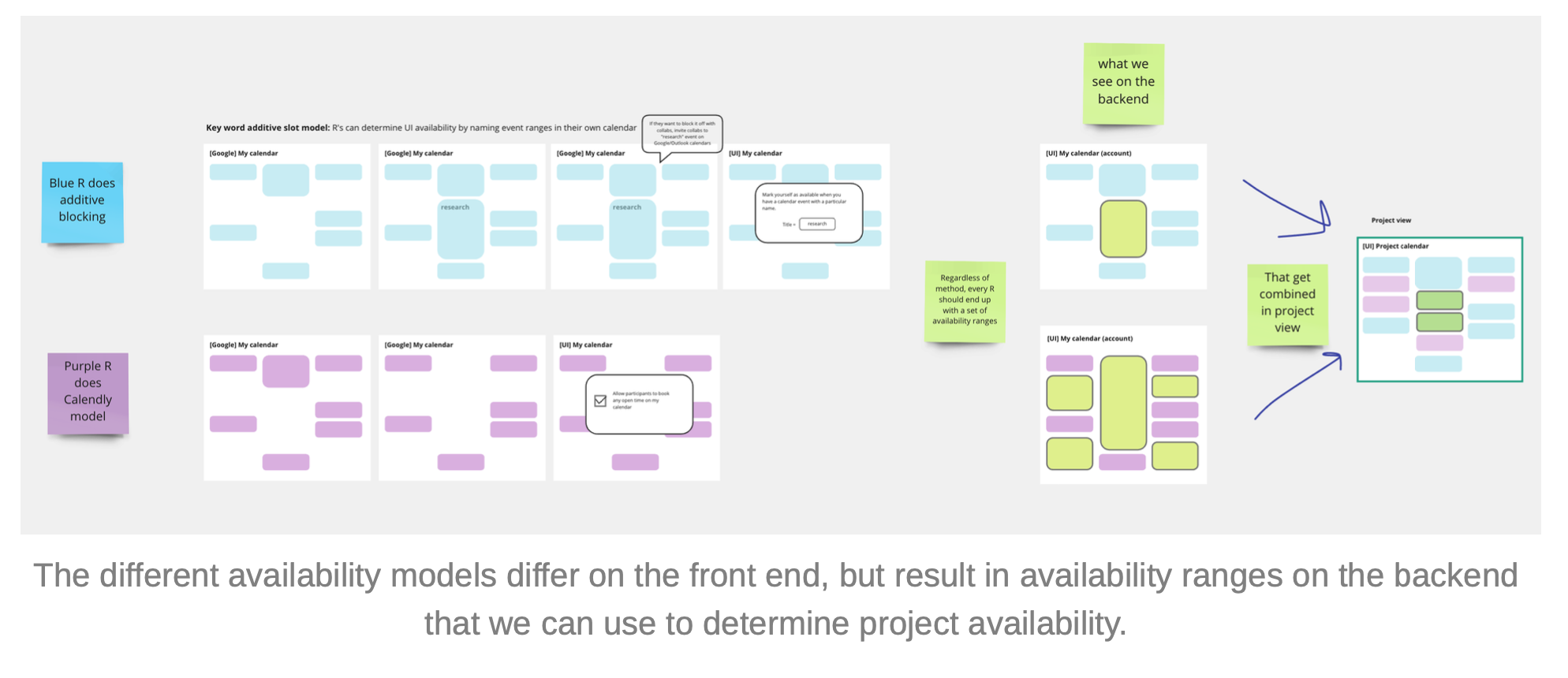

We did a design deep dive on availability models.

Our designer did a deep dive exploration on availability models, which were far more complex than we had expected. Because of the complexity, as well as the importance of getting it right, we did additional concept testing before moving on to higher-fidelity designs.

An availability model that subtracts busy time from working hours to determine availability.
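To make the idea concrete, here is a minimal Python sketch of one such model: it removes busy intervals from a single block of working hours and returns the free intervals that remain. The function name and the interval representation are assumptions made for this example. It is a simplified illustration, not the model we shipped, and it ignores details such as time zones, buffers between sessions, and multiple teammates' calendars.

```python
from datetime import datetime
from typing import List, Tuple

Interval = Tuple[datetime, datetime]  # (start, end), with start < end


def subtract_busy(working: Interval, busy: List[Interval]) -> List[Interval]:
    """Return the free intervals left after removing busy time from working hours."""
    work_start, work_end = working
    free: List[Interval] = []
    cursor = work_start  # earliest moment not yet known to be busy

    for busy_start, busy_end in sorted(busy):
        if busy_end <= cursor:
            continue  # this busy block is already behind the cursor
        if busy_start >= work_end:
            break  # everything from here on is after working hours
        if busy_start > cursor:
            free.append((cursor, busy_start))  # gap before this busy block
        cursor = max(cursor, busy_end)
        if cursor >= work_end:
            break

    if cursor < work_end:
        free.append((cursor, work_end))  # remaining time at the end of the day
    return free


# Hypothetical day: working 9:00-17:00 with overlapping and overrunning meetings
day = lambda h, m=0: datetime(2024, 1, 15, h, m)
free_slots = subtract_busy(
    (day(9), day(17)),
    [(day(10), day(11)), (day(10, 30), day(12)), (day(16), day(18))],
)
```

Sorting the busy blocks first lets overlapping meetings merge naturally, since the cursor only ever moves forward.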

We changed our approach to designing a solution from fully automated to a hybrid model.

Our initial assumption was that our universally problematic manual scheduling workflows would need to be completely replaced by new automated workflows. However, the insight that some researchers were happy with the current system prompted us to pause and reconsider our approach. The framework for our use cases gave us shared language to discuss options with stakeholders, as well.

Despite the low percentage of users that were happy with manual scheduling, stakeholders agreed that it was an important gateway use case into growing product usage in an organization. In these conversations, we also learned from our customer-facing stakeholders that some companies had strict security policies about calendar syncing - which we had missed in our initial stakeholder conversations. Having a great experience for manual scheduling was important for these accounts to get activated quickly, even if calendar syncing hadn’t yet been approved.

After these discussions, our availability model deep dive, concept testing, and iterations and prototyping with the technical team, we eventually arrived at a mixed model with much higher confidence in our solution.

We prioritized rescheduling flows as our first milestone.

Discovering that rescheduling flows were a huge pain point led us to make a strategic shift in our team’s roadmap: instead of starting with core scheduling flows, we proposed starting with low-hanging fruit in rescheduling flows. This would build back trust with researchers by showing them we were fixing long-time pain points, and avoid big marketing fanfare about new features that led right back to bad experiences in rescheduling. It would also tighten the design scope for our first milestone, give our engineers a chance to become familiar with a new technical landscape, and help the team build some momentum before tackling the bigger, harder challenges ahead.

This strategic pivot significantly improved researcher efficiency and satisfaction, while also laying a solid foundation for future enhancements.

We built a case for quotas… in the long-term.

We considered whether to pursue quotas, since their absence was the root cause of some pain points, but our team ultimately upheld our original charter: improving scheduling processes. While supporting quotas and supporting more complex scheduling would both benefit more advanced research teams, we believed that improved scheduling would make it easier for researchers to invite more collaborators to observe and/or support their studies and would therefore expand usage within an organization.

However, our research findings underscored the pressing need for quota support among our users. Leveraging this insight, we collaborated with other product teams to consolidate related research findings and advocated to build quotas into the medium- and long-term product strategy. This strategic alignment helped us stay responsive to user needs while also keeping us focused on our core objective.

Takeaways

Our discovery research into this new problem space not only oriented our team and stakeholders to researchers’ needs, common use cases, and current pain points but also provided us with shared language and qualitative evidence to propose changes to our initial roadmap assumptions. Instead of starting with core flows, we began with rescheduling, acknowledging the importance of addressing immediate pain points. Similarly, rather than opting for a complete transition from manual to automated scheduling, we developed a more nuanced solution that better accommodated researchers’ diverse needs. Additionally, while we considered pivoting to address quotas, we made the strategic decision to defer that opportunity for future exploration, based on our insights and stakeholder discussions.

While these findings led us to delay our “big splash” marketing release, we were able to design solutions with higher confidence that we understood the problem space and our users’ needs and create a better product. These long-neglected flows had been eroding trust with users, and listening to them and releasing changes that met their needs were important strides to rebuild their trust in the product and foster long-term engagement.

Appendix A: Moderator guide

Intro

Thank you so much for taking the time to talk with me today. My name is NAME, and I’m ROLE on our team. How are you doing today? Is this still a good time for you?

We also have NAME joining us, who is our ROLE. They’re just here to take notes and will remain camera off for the rest of the call. I’ll be asking you some questions to understand your workflows for scheduling participants. As you saw in the sign-up process, we record our calls for research purposes only, and only our small team has access to the recordings. Is it alright if I start the recording now? Do you have any questions for me before we start?

Warmup

Learning goals: Get context for their team and how researchers collaborate on projects.

To start off, I’d like to learn a little bit about you and your team.

- Can you tell me about your current role; what do you do?

- How many researchers, or people who do research, are there at your company?

- What does collaboration with them look like day to day?

Scheduling deep dive

Learning goals: Understand scheduling-specific processes and pain points.

- Could you tell me about the last moderated study you ran?

- What were your research goals?

- Could you tell me who you collaborated with?

- How many participants were you hoping to speak with?

- How many did you end up speaking with?

- Who moderated the sessions?

- How many people from your team attended each session?

- What was the primary reason that each person attended the session?

- Can you show or talk me through how you scheduled research sessions for that project?

- What was the first step you took to begin the scheduling process?

- [Etc to get a sense of a sequence of actions. Ask questions to clarify what they’re doing.]

- What was the hardest part of this process?

- What was the easiest part of this process?

Magic wand wishes and wind down

Learning goals: Hear about potential opportunities for the product.

Our time is coming to a close and I’d love to wind down with what I call “Magic Wand Wishes.” If you could make any change to your experience in our product by waving a magic wand, what would it be?

Thank you again for taking the time to connect with our team. Sharing your experience helps us improve the experience in our product for all of our users! As a thank you for your time, you’ll receive an email with a code to redeem a $45 gift certificate.