Driving growth at a healthcare education startup

TL;DR

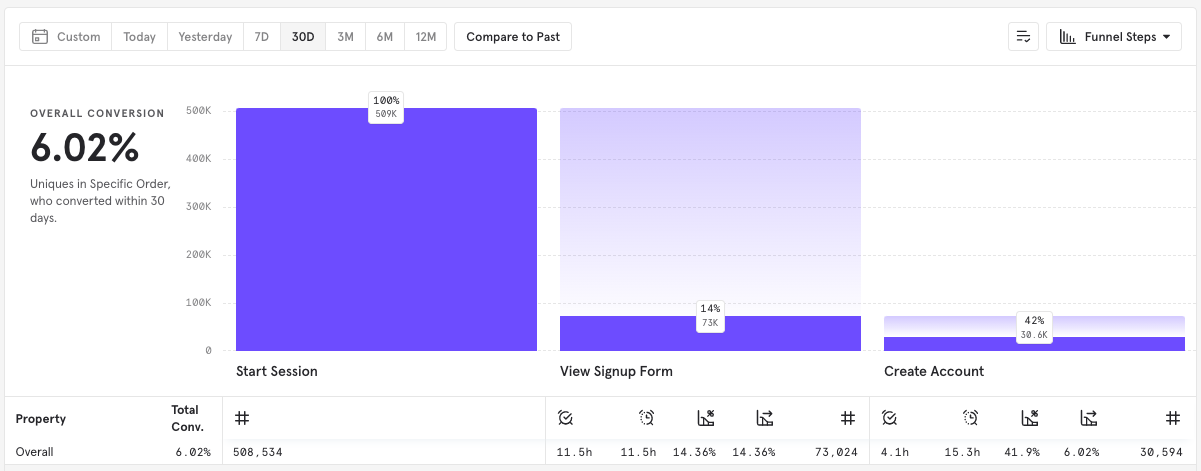

As the growth product manager at Osmosis.org, I led a cross-functional team to design and run a series of A/B tests aimed at increasing the free trial signup rate. Beyond a 30% increase in signups, the insights from these experiments opened the door to larger UX research projects.

Goal and metrics

I was tasked with driving top-of-funnel growth. My primary metric was the percentage of site visitors who signed up for a free trial account, a metric with plenty of room for improvement.

I also closely monitored other KPIs, including first-visit session duration and the rate at which free trial users went on to purchase a subscription.

Problem framing

Because we were in a resource-scarce and time-sensitive environment, I decided to defer a larger UX research project until we had initial insights and some quick wins to provide direction and credibility. Instead, I convened a cross-functional team to brainstorm incremental, iterative experiments and kept stakeholders involved throughout the project.

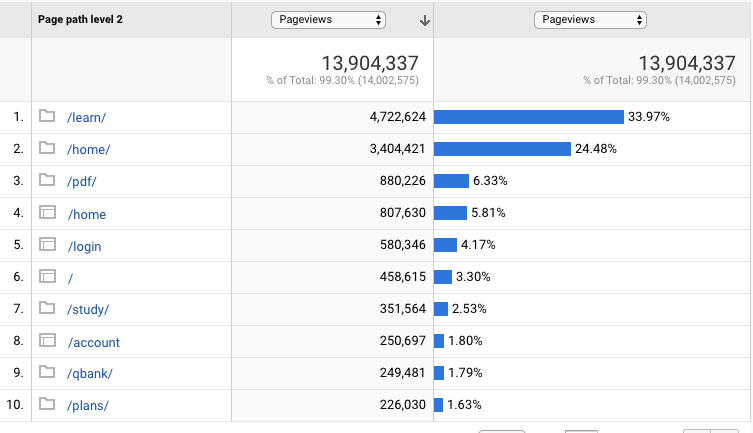

To prepare for an ideation workshop, I gathered background context. Using Google Analytics page view data, I narrowed the scope of the problem to the two highest-traffic user flows.

I also pulled a couple of screenshots and Fullstory recordings of real users navigating those user flows.

I summarized the goal, KPIs, usage data, and user interactions in a one-pager that I distributed to representatives from the content, marketing, customer success, engineering, design, and product teams.

Cross-functional facilitation

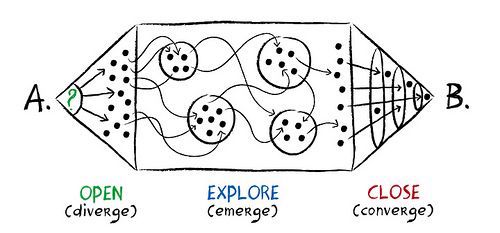

For virtual brainstorming sessions, I often use a diverge-emerge-converge framework and the virtual whiteboarding tool Miro.

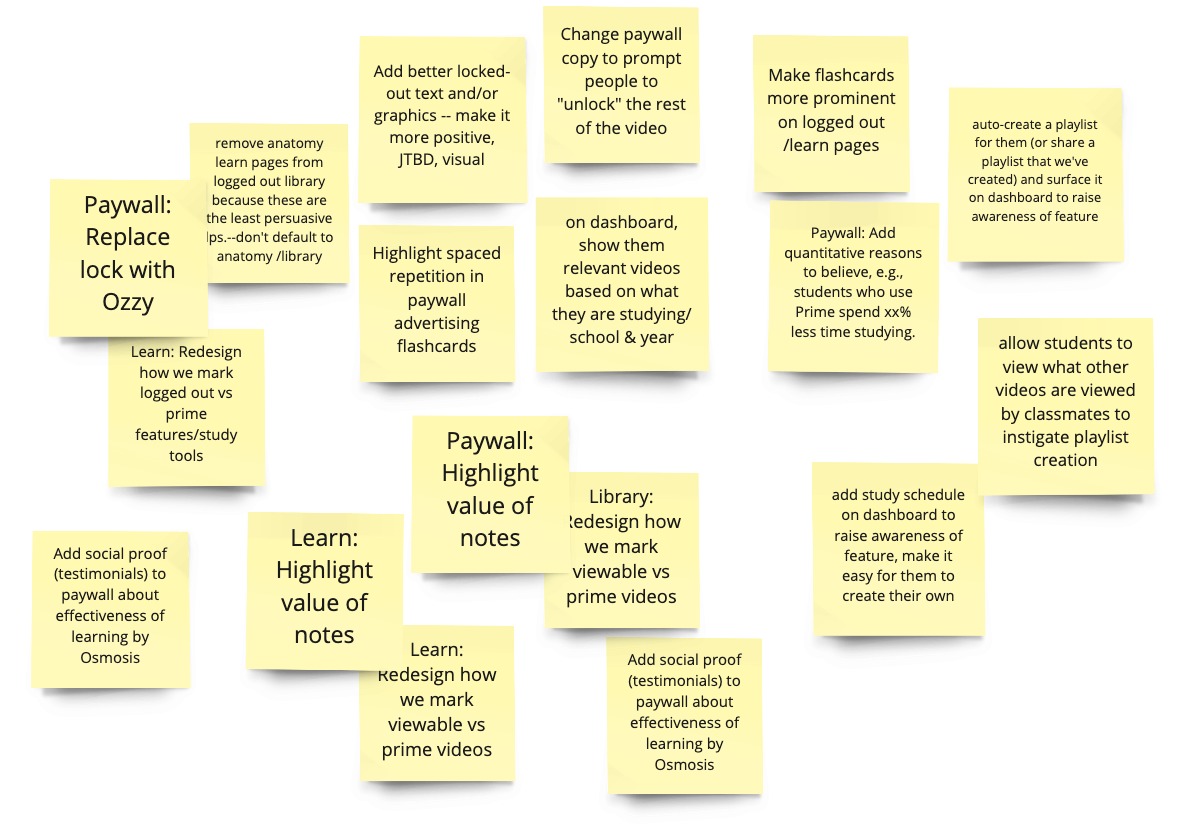

I started by asking participants to add their ideas to the whiteboard individually. This practice encourages divergent ideas and helps avoid groupthink.

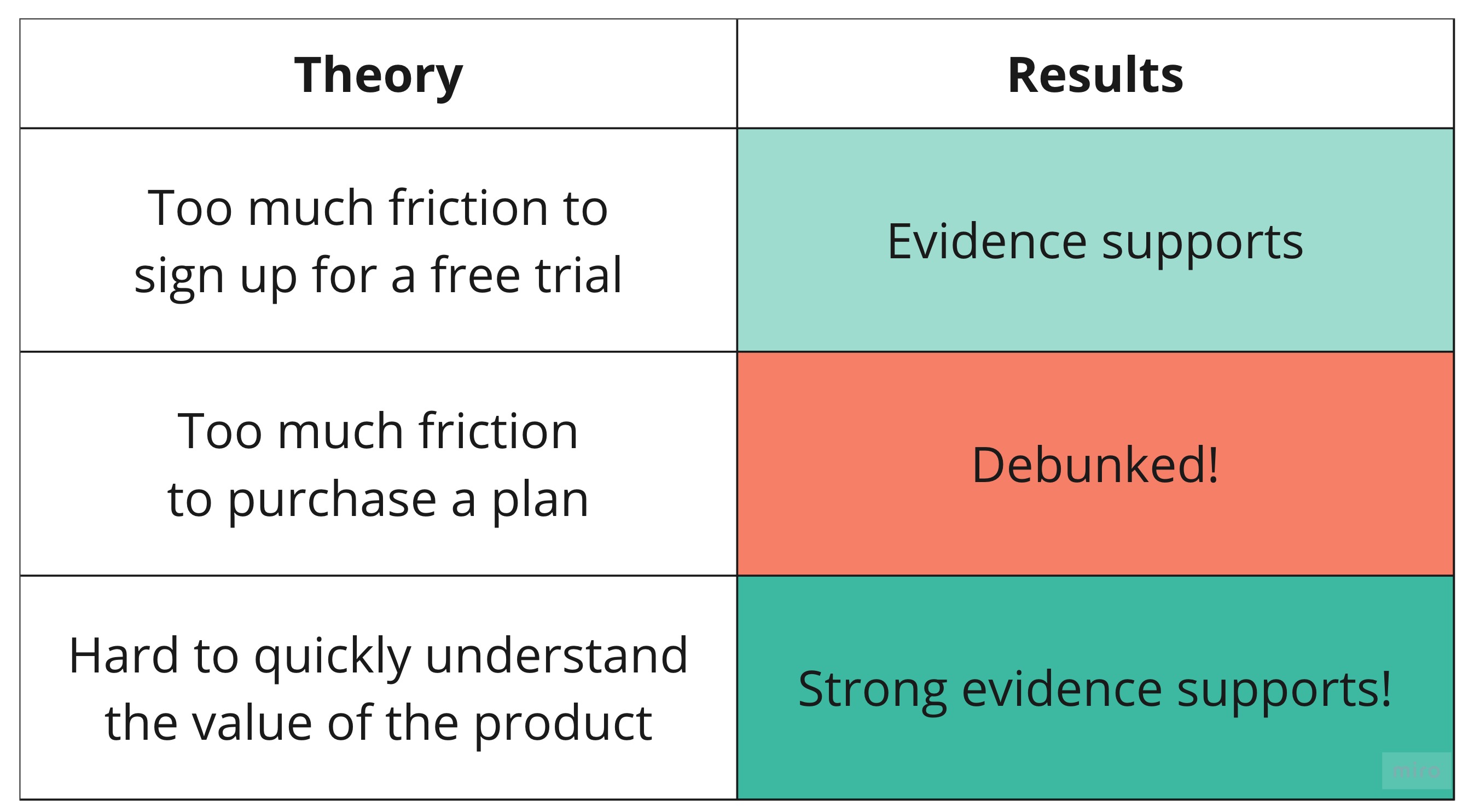

Next, we reviewed the ideas together and grouped them until three main theories emerged:

- There was too much friction to sign up for a free trial.

- There was too much friction to purchase a plan.

- It was too difficult to quickly understand the value of the product.

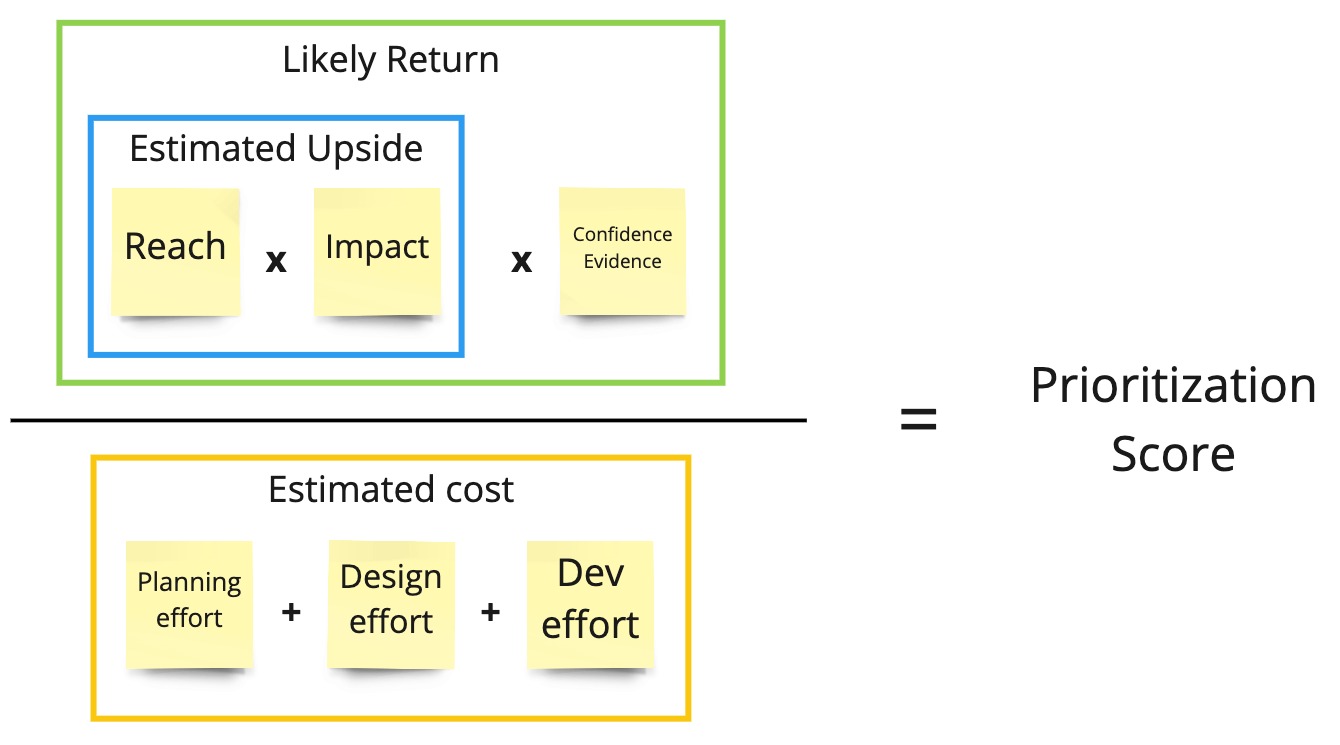

For each theory, we had a collection of experiment ideas that could validate or invalidate it. I led the group through a RICE (Reach, Impact, Confidence, Effort) scoring exercise to prioritize high-impact experiments that required less design and engineering effort. The exercise left us with a clearly prioritized list of A/B tests.
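RICE scores each idea as (Reach × Impact × Confidence) ÷ Effort and ranks ideas from highest to lowest score. The minimal Python sketch below shows that ranking step. It assumes the scales commonly used with RICE: impact from 0.25 (minimal) to 3 (massive), confidence as a fraction, and effort in person-months. The experiment names and numbers are hypothetical and do not reflect the team's actual scores.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    reach: float       # users affected per period (hypothetical units)
    impact: float      # 0.25 = minimal, 0.5 = low, 1 = medium, 2 = high, 3 = massive
    confidence: float  # 0.0 to 1.0
    effort: float      # person-months of design + engineering work

    @property
    def rice(self) -> float:
        # RICE = (Reach * Impact * Confidence) / Effort
        return self.reach * self.impact * self.confidence / self.effort


def prioritize(ideas: list[ExperimentIdea]) -> list[ExperimentIdea]:
    """Return ideas sorted from highest to lowest RICE score."""
    return sorted(ideas, key=lambda idea: idea.rice, reverse=True)


# Hypothetical backlog: one illustrative idea per theory.
backlog = [
    ExperimentIdea("Shorter signup form", reach=5000, impact=1, confidence=0.8, effort=2),
    ExperimentIdea("Simplified pricing page", reach=1500, impact=2, confidence=0.5, effort=3),
    ExperimentIdea("Content search on homepage", reach=8000, impact=2, confidence=0.5, effort=5),
]

for idea in prioritize(backlog):
    print(f"{idea.rice:8.1f}  {idea.name}")
```

Dividing by effort is what lets cheap, moderately promising tests outrank expensive ones. In a scarce-resource setting, that favors quick wins.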

Execution and results

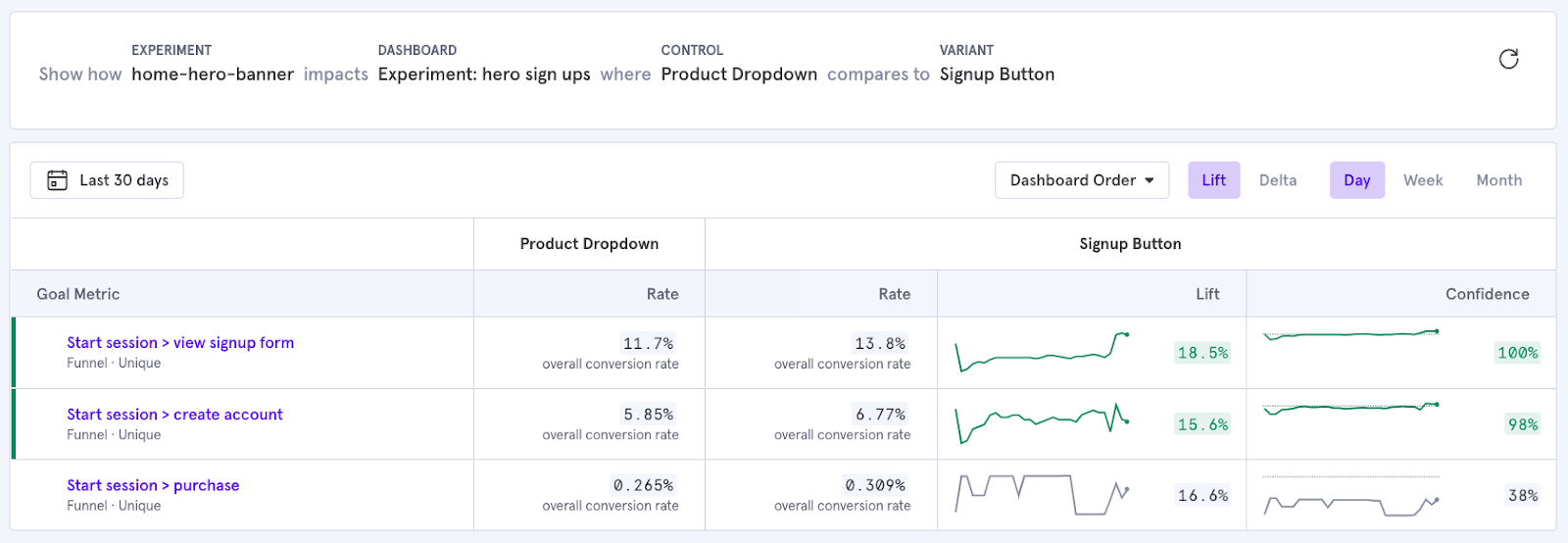

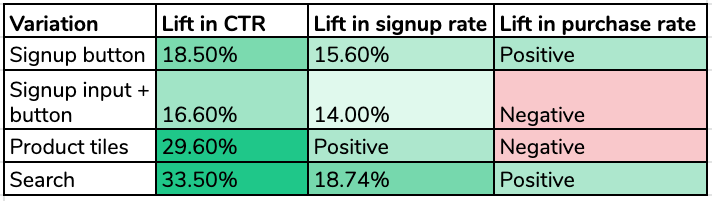

After the session, I collaborated with the engineering and design teams to clarify scope, design experiments, put tracking in place, and run a series of A/B tests.

We ran each experiment variation against a control.
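Comparing a variant with its control comes down to two questions. How large is the relative lift in conversion rate, and is that difference bigger than chance alone would explain? The minimal Python sketch below assumes each arm is summarized as (signups, visitors). It uses a pooled two-proportion z-test as one common significance check; the source does not say which test or tooling the team used, and the counts in the example are hypothetical.

```python
from __future__ import annotations

import math


def conversion_rate(signups: int, visitors: int) -> float:
    """Share of visitors who signed up for a free trial."""
    return signups / visitors


def compare_to_control(control: tuple[int, int], variant: tuple[int, int]) -> dict[str, float]:
    """Compare a variant with the control; each arm is (signups, visitors).

    Returns both conversion rates, the relative lift, and a two-sided
    p-value from a pooled two-proportion z-test.
    """
    (c_conv, c_n), (v_conv, v_n) = control, variant
    p_c = conversion_rate(c_conv, c_n)
    p_v = conversion_rate(v_conv, v_n)
    lift = (p_v - p_c) / p_c  # relative lift: 0.30 means +30%

    # Pooled rate under the null hypothesis that both arms convert equally.
    p_pool = (c_conv + v_conv) / (c_n + v_n)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / c_n + 1 / v_n))
    z = (p_v - p_c) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return {"control_rate": p_c, "variant_rate": p_v, "lift": lift, "z": z, "p_value": p_value}


# Hypothetical counts, not real Osmosis data.
result = compare_to_control(control=(200, 10_000), variant=(240, 10_000))
print(f"lift={result['lift']:+.1%}  p={result['p_value']:.3f}")
```

With these made-up counts, a 20% relative lift still lands just above the conventional 0.05 threshold. This illustrates why traffic volume and test duration matter when reading A/B results.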

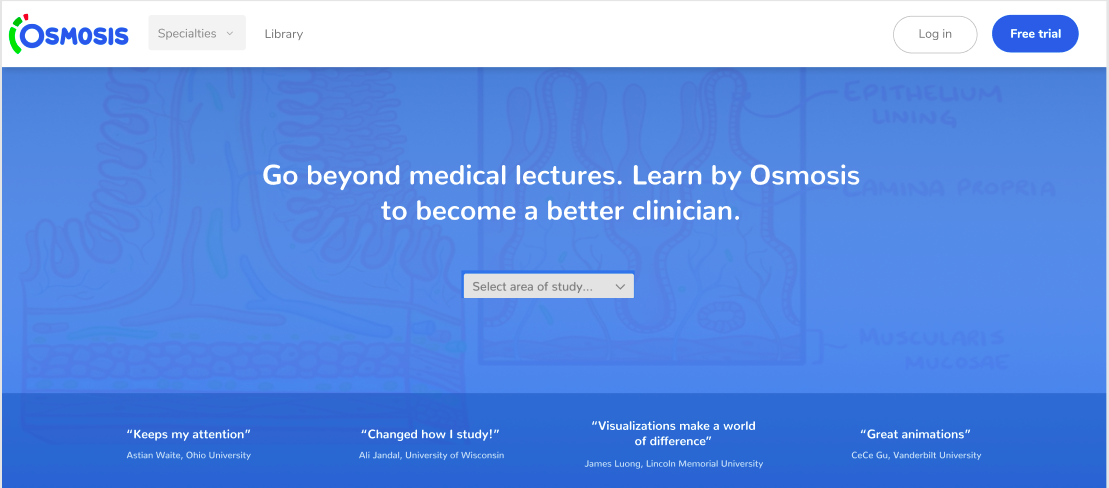

In one experiment, we tested the hypothesis that inviting visitors to explore the site's content library would demonstrate more value and result in more signups. The variant replaced the main call to action with a content search bar and a topic dropdown, which actually added friction to the signup flow.

However, this counterintuitive change paid off, raising the signup rate by more than 30%.

Multiple experiments showed strong improvements to conversion rates.

Next steps

The tests gave us quick feedback on our theories.

The evidence showed that our biggest opportunity was to better demonstrate the value of the product. Showcasing our content was one promising way to expose that value, but it was only the tip of the iceberg.

This insight made it clear that larger UX research and product discovery efforts were needed to fully unlock the idea’s potential and apply it more broadly throughout the product.